| Name | Modified | Size | Downloads / Week |
|---|---|---|---|
| yolov5s-VOC.pt | 2021-07-19 | 14.5 MB | |
| README.md | 2021-04-11 | 49.3 kB | |
| v5.0 - YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations.tar.gz | 2021-04-11 | 1.0 MB | |
| v5.0 - YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations.zip | 2021-04-11 | 1.1 MB | |
| yolov5x6.pt | 2021-04-11 | 284.5 MB | |
| yolov5x.pt | 2021-04-11 | 176.1 MB | |
| yolov5s6.pt | 2021-04-11 | 25.8 MB | |
| yolov5s.pt | 2021-04-11 | 14.8 MB | |
| yolov5m6.pt | 2021-04-11 | 72.4 MB | |
| yolov5m.pt | 2021-04-11 | 43.1 MB | |
| yolov5l6.pt | 2021-04-11 | 155.2 MB | |
| yolov5l.pt | 2021-04-11 | 94.6 MB | |
| Totals: 12 Items | | 883.1 MB | 2 |

This release implements YOLOv5-P6 models and retrained YOLOv5-P5 models. All model sizes YOLOv5s/m/l/x are now available in both P5 and P6 architectures:

- YOLOv5-P5 models (same architecture as v4.0 release): 3 output layers P3, P4, P5 at strides 8, 16, 32, trained at `--img 640`.

        :::bash
        python detect.py --weights yolov5s.pt  # P5 models; also yolov5m.pt, yolov5l.pt, yolov5x.pt

- YOLOv5-P6 models: 4 output layers P3, P4, P5, P6 at strides 8, 16, 32, 64, trained at `--img 1280`.

        :::bash
        python detect.py --weights yolov5s6.pt  # P6 models; also yolov5m6.pt, yolov5l6.pt, yolov5x6.pt
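To make the stride figures concrete, the sketch below computes the side length of each output grid for a square input. The helper `grid_sizes` and the two dictionaries are illustrative names, not part of the YOLOv5 codebase; the strides are the ones listed above, and the sketch assumes the image size is divisible by the largest stride.

```python
# Strides of the detection layers, as listed in the release notes above.
P5_STRIDES = {"P3": 8, "P4": 16, "P5": 32}
P6_STRIDES = {"P3": 8, "P4": 16, "P5": 32, "P6": 64}


def grid_sizes(img_size, strides):
    """Return the side length of each output grid for a square input.

    Hypothetical helper for illustration only; it assumes img_size is a
    multiple of the largest stride and raises otherwise.
    """
    largest = max(strides.values())
    if img_size % largest != 0:
        raise ValueError(f"img_size {img_size} is not a multiple of {largest}")
    return {name: img_size // stride for name, stride in strides.items()}


p5_grids = grid_sizes(640, P5_STRIDES)
p6_grids = grid_sizes(1280, P6_STRIDES)
print(p5_grids)  # {'P3': 80, 'P4': 40, 'P5': 20}
print(p6_grids)  # {'P3': 160, 'P4': 80, 'P5': 40, 'P6': 20}
```

At 1280 pixels the P6 layer yields the same 20x20 grid that the P5 layer yields at 640 pixels, with each P6 cell covering a 64x64 pixel region of the input.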

Example usage from the command line:

    :::bash
    python detect.py --weights yolov5m.pt --img 640    # P5 model at 640
    python detect.py --weights yolov5m6.pt --img 640   # P6 model at 640
    python detect.py --weights yolov5m6.pt --img 1280  # P6 model at 1280

Example usage from PyTorch Hub:

    :::python
    import torch

    model = torch.hub.load('ultralytics/yolov5', 'yolov5m6')  # P6 model
    results = model(imgs, size=1280)  # inference at 1280; imgs is supplied by the caller
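Inference sizes are generally expected to be a multiple of the model's largest stride (32 for P5 models and 64 for P6 models, per the stride lists above). As a minimal sketch, the function below rounds a requested size up to the nearest such multiple. `round_to_stride` is a hypothetical helper written for this example; YOLOv5 performs its own image-size check, which is not reproduced here.

```python
import math


def round_to_stride(img_size, max_stride):
    """Round img_size up to the nearest multiple of max_stride.

    Hypothetical helper for illustration; not a YOLOv5 function.
    """
    return math.ceil(img_size / max_stride) * max_stride


print(round_to_stride(640, 32))   # 640, already a multiple of 32
print(round_to_stride(1000, 64))  # 1024, the next multiple of 64
print(round_to_stride(1280, 64))  # 1280, already a multiple of 64
```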

Notable Updates

- YouTube Inference: Direct inference from YouTube videos, e.g. `python detect.py --source 'https://youtu.be/NUsoVlDFqZg'`. Live streaming videos and normal videos are supported. (https://github.com/ultralytics/yolov5/pull/2752)

- AWS Integration: Amazon AWS integration and new AWS Quickstart Guide for simple EC2 instance YOLOv5 training and resuming of interrupted Spot instances. (https://github.com/ultralytics/yolov5/pull/2185)

- Supervise.ly Integration: New integration with the Supervisely Ecosystem for training and deploying YOLOv5 models with Supervise.ly (https://github.com/ultralytics/yolov5/issues/2518)

- Improved W&B Integration: Allows saving datasets and models directly to Weights & Biases. This allows for --resume directly from W&B (useful for temporary environments like Colab), as well as enhanced visualization tools. See this blog by @AyushExel for details. (https://github.com/ultralytics/yolov5/pull/2125)

Updated Results

P6 models include an extra P6/64 output layer for detection of larger objects, and benefit the most from training at higher resolution. For this reason we trained all P5 models at 640, and all P6 models at 1280.

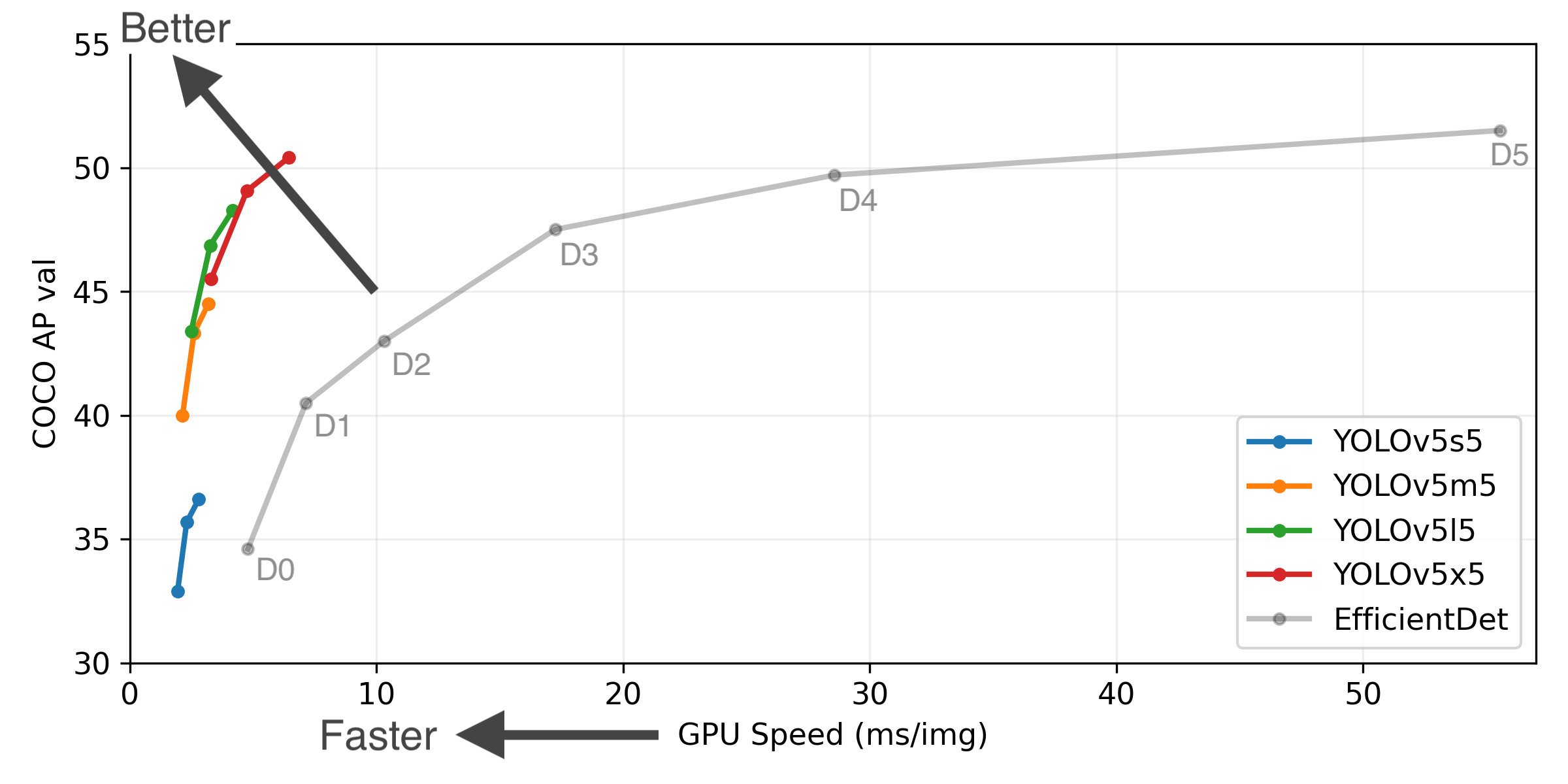

YOLOv5-P5 640 Figure

Figure Notes

* GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS.
* EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.
* **Reproduce** by `python test.py --task study --data coco.yaml --iou 0.7 --weights yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

- April 11, 2021: v5.0 release: YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations.

- January 5, 2021: v4.0 release: nn.SiLU() activations, Weights & Biases logging, PyTorch Hub integration.

- August 13, 2020: v3.0 release: nn.Hardswish() activations, data autodownload, native AMP.

- July 23, 2020: v2.0 release: improved model definition, training and mAP.

Pretrained Checkpoints

| Model | size (pixels) | mAP val 0.5:0.95 | mAP test 0.5:0.95 | mAP val 0.5 | Speed V100 (ms) | params (M) | FLOPS 640 (B) |
|---|---|---|---|---|---|---|---|
| YOLOv5s | 640 | 36.7 | 36.7 | 55.4 | 2.0 | 7.3 | 17.0 |
| YOLOv5m | 640 | 44.5 | 44.5 | 63.1 | 2.7 | 21.4 | 51.3 |
| YOLOv5l | 640 | 48.2 | 48.2 | 66.9 | 3.8 | 47.0 | 115.4 |
| YOLOv5x | 640 | 50.4 | 50.4 | 68.8 | 6.1 | 87.7 | 218.8 |
| YOLOv5s6 | 1280 | 43.3 | 43.3 | 61.9 | 4.3 | 12.7 | 17.4 |
| YOLOv5m6 | 1280 | 50.5 | 50.5 | 68.7 | 8.4 | 35.9 | 52.4 |
| YOLOv5l6 | 1280 | 53.4 | 53.4 | 71.1 | 12.3 | 77.2 | 117.7 |
| YOLOv5x6 | 1280 | 54.4 | 54.4 | 72.0 | 22.4 | 141.8 | 222.9 |
| YOLOv5x6 TTA | 1280 | 55.0 | 55.0 | 72.0 | 70.8 | - | - |
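As a rough way to read the table, the sketch below pairs each P5 checkpoint with its P6 counterpart and computes the mAP gain and the ratio of per-image times. The values are copied from the table above; `CHECKPOINTS` and `compare_p5_p6` are illustrative names introduced here, and the comparison mixes two resolutions (640 and 1280) because that is how the models were trained and measured.

```python
# (model, image size, mAP val 0.5:0.95, V100 speed in ms) copied from the table above.
CHECKPOINTS = [
    ("YOLOv5s", 640, 36.7, 2.0),
    ("YOLOv5m", 640, 44.5, 2.7),
    ("YOLOv5l", 640, 48.2, 3.8),
    ("YOLOv5x", 640, 50.4, 6.1),
    ("YOLOv5s6", 1280, 43.3, 4.3),
    ("YOLOv5m6", 1280, 50.5, 8.4),
    ("YOLOv5l6", 1280, 53.4, 12.3),
    ("YOLOv5x6", 1280, 54.4, 22.4),
]


def compare_p5_p6(rows):
    """Pair each P5 model with its P6 variant (name + '6').

    Returns a list of (p5_name, mAP gain, time ratio) tuples.
    Hypothetical helper for illustration only.
    """
    by_name = {name: (m_ap, ms) for name, _, m_ap, ms in rows}
    pairs = []
    for name, (map_p5, ms_p5) in by_name.items():
        p6_name = name + "6"
        if p6_name in by_name:
            map_p6, ms_p6 = by_name[p6_name]
            pairs.append((name, round(map_p6 - map_p5, 1), round(ms_p6 / ms_p5, 2)))
    return pairs


comparison = compare_p5_p6(CHECKPOINTS)
for name, gain, ratio in comparison:
    print(f"{name} -> {name}6: +{gain} mAP, {ratio}x time per image")
```

On these figures the P6 models at 1280 gain between 4.0 and 6.6 mAP over their P5 counterparts at 640, at roughly 2.2x to 3.7x the time per image.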

Table Notes

* APtest denotes COCO [test-dev2017](http://cocodataset.org/#upload) server results, all other AP results denote val2017 accuracy.
* AP values are for single-model single-scale unless otherwise noted. **Reproduce mAP** by `python test.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`
* SpeedGPU averaged over 5000 COCO val2017 images using a GCP [n1-standard-16](https://cloud.google.com/compute/docs/machine-types#n1_standard_machine_types) V100 instance, and includes FP16 inference, postprocessing and NMS. **Reproduce speed** by `python test.py --data coco.yaml --img 640 --conf 0.25 --iou 0.45`
* All checkpoints are trained to 300 epochs with default settings and hyperparameters (no autoaugmentation).
* Test Time Augmentation ([TTA](https://github.com/ultralytics/yolov5/issues/303)) includes reflection and scale augmentation. **Reproduce TTA** by `python test.py --data coco.yaml --img 1536 --iou 0.7 --augment`

Changelog

Changes between previous release and this release: https://github.com/ultralytics/yolov5/compare/v4.0...v5.0

Changes since this release: https://github.com/ultralytics/yolov5/compare/v5.0...HEAD

Click a section below to expand details: